To help inform decisions between different fisheries management strategies, SEAwise has employed a variety of simulation models. These models investigate and predict the biological, ecological, and economic trade-offs of different management strategies under a variety of environmental and economic scenarios. They range from relatively simple mixed fisheries models to more complex ecosystem models. Simulating any real-life system entails some degree of uncertainty, and this typically increases with the complexity of the system being modelled. To ensure our models are informative and aid management decisions, quantifying their uncertainty and communicating it transparently is an integral part of SEAwise. Here, we outline how uncertainty has been accounted for in SEAwise models.

The level of uncertainty associated with a model output describes how confident we can be that the result reflects real-world conditions and accurately predicts future scenarios. A result with a high level of uncertainty may indicate, for example, gaps in our understanding of the processes involved or problems with the data used. In these instances, results should be interpreted alongside additional sources of evidence. Uncertainty can be introduced in a variety of ways, including through limitations in the data used to build a model and through the complexity of the interactions the model examines.

SEAwise has worked to ensure that uncertainty is properly addressed in all models and communicated clearly. The models used in the project range from simple biological process models to complex end-to-end ecosystem models. One of the challenges within SEAwise is to make these models operational while keeping them informative for management decisions. Understanding the level of uncertainty around the outputs of these models, and communicating it, is essential to support their use.

In this SEAwise EBFM Tool, we have used shaded droplets to communicate the level of uncertainty associated with each output. SEAwise has developed models with active consideration of management needs and of how model outputs will be used. Addressing sources of uncertainty and decision-makers’ tolerance of uncertainty are key concepts in SEAwise’s quality assurance processes. For more information about how SEAwise has estimated uncertainty and the predictive capability of its models, please see our guidelines for the treatment of variance in forecasts, structural uncertainty, risk communication, and acceptable levels.

SEAwise defines three main sources of predictive uncertainty:

By including each of these uncertainties in a peer-reviewed framework that specifies acceptable risk levels, SEAwise can tune the complexity of its predictive models to maximise their predictive ability. In practice, this means the models integrate data and evidence from several sources to reflect real-world conditions more accurately, while delivering outputs that are more likely to predict future scenarios accurately than those of a simpler model.

Data used in SEAwise are continuously screened for errors by expert teams to ensure that results are reproducible and reliable. Data and models analysed and applied in SEAwise undergo thorough quality assurance to ensure that model results are suited to the objectives of the analyses. Using high-quality data and undertaking this quality assurance process also ensures that results are robust, even when uncertainty arises in the development of the model.

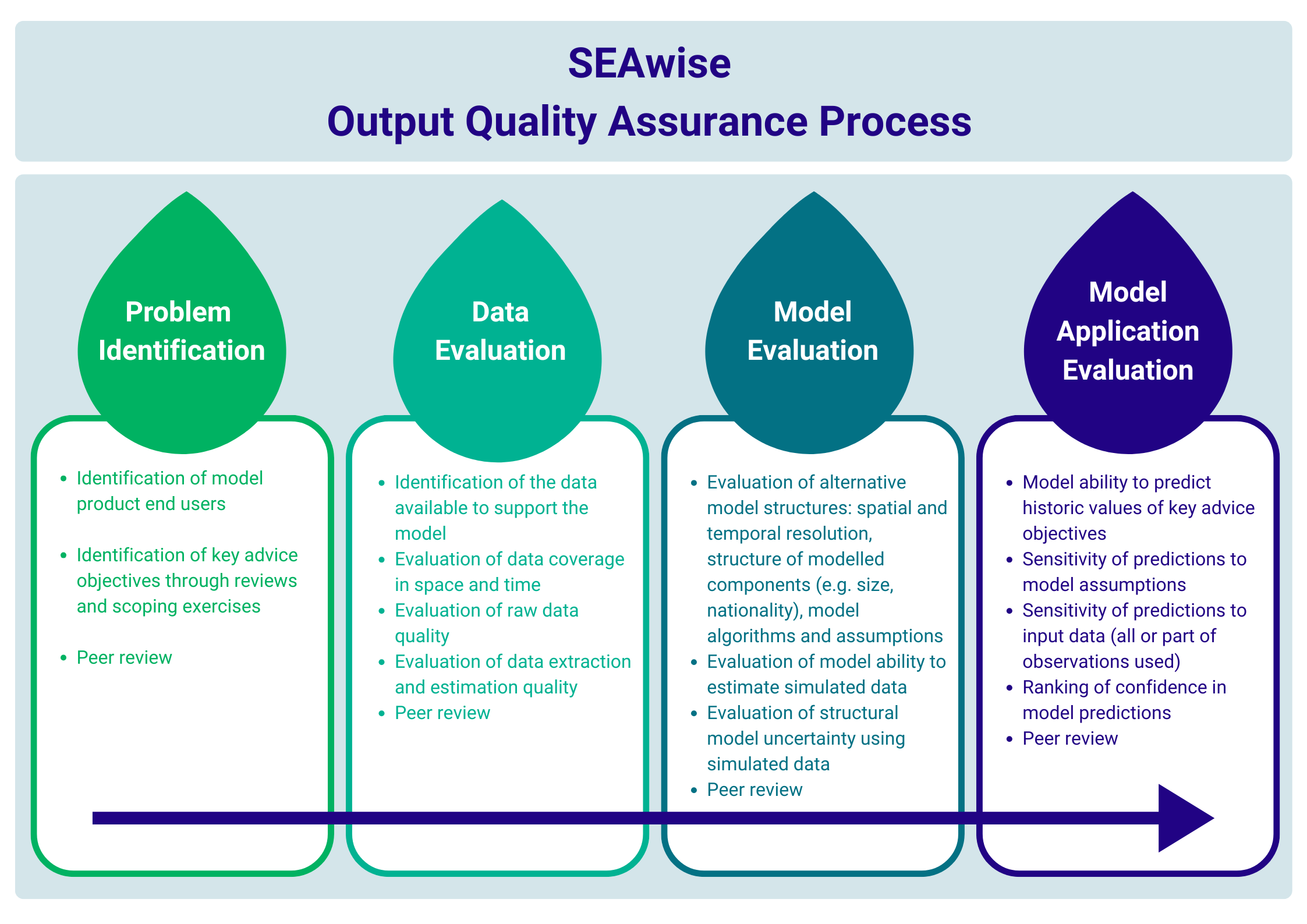

Quality assurance of our models includes a review of the data and model application against specific criteria (see the SEAwise Report on requirements for transparency and quality control for full details). SEAwise also works with target end users to ensure that model outputs, including levels of uncertainty, are easily understood and meet the expectations of intended users. The process has four steps:

The first step ensures that the models used are appropriate for the problem being investigated, and is based on the results of systematic reviews and scoping exercises.

Data evaluation investigates the spatio-temporal coverage of data and the quality of raw and processed data for use in the model. Criteria include:

Model evaluation examines different aspects of the model design to understand its predictive ability. It also assesses how well the model can estimate simulated data.
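One common way to test whether a model can estimate simulated data is a simulation self-test: generate data from the model with known parameters, refit the model to those data, and check how closely the estimates recover the known values. The sketch below is illustrative only and is not SEAwise code; it assumes a hypothetical log-linear trend model, and the names `fit_trend` and `self_test` and all parameter values are made up for the example.

```python
import random
import statistics


def fit_trend(years, values):
    """Ordinary least-squares fit of values = intercept + slope * year."""
    mean_x = statistics.fmean(years)
    mean_y = statistics.fmean(values)
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def self_test(true_intercept, true_slope, sigma, n_years=20, n_reps=200, seed=1):
    """Hypothetical simulation self-test (illustrative, not SEAwise code).

    Simulates n_reps data sets from known parameters plus Gaussian noise,
    refits each one, and returns the median relative error of the estimated
    slope. true_slope must be non-zero.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same result
    years = list(range(n_years))
    rel_errors = []
    for _ in range(n_reps):
        simulated = [true_intercept + true_slope * t + rng.gauss(0.0, sigma) for t in years]
        _, est_slope = fit_trend(years, simulated)
        rel_errors.append((est_slope - true_slope) / true_slope)
    return statistics.median(rel_errors)


# A median relative error close to 0 suggests the fit recovers the known slope without bias.
print(self_test(true_intercept=10.0, true_slope=-0.05, sigma=0.2))
```

A real self-test would refit the full model and look at the spread of errors for every estimated quantity, not only the median for one parameter.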

Model application evaluation assesses how well models predict observed data and whether they project sensible future developments from these data.
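The text does not name the skill metric used for this step. As an illustration only, the sketch below scores a one-step-ahead hindcast with the mean absolute scaled error (MASE), a general-purpose forecast-skill metric that compares a model's prediction errors with those of a naive "same as last year" forecast. The predictor `linear_extrapolation` is a hypothetical stand-in for a fitted model, and the data series is invented for the example.

```python
def hindcast_mase(series, predict_next, n_peels=5):
    """One-step-ahead hindcast scored with the mean absolute scaled error (MASE).

    For each of the last n_peels years, later observations are removed
    ("peeled"), predict_next is applied to the remaining data, and the
    prediction is compared with the held-out observation. The baseline is a
    naive persistence forecast (next value = last observed value).
    MASE < 1: the model beats the naive baseline; MASE > 1: it does worse.
    """
    if not 0 < n_peels < len(series):
        raise ValueError("n_peels must be between 1 and len(series) - 1")
    model_errors, naive_errors = [], []
    for k in range(n_peels, 0, -1):
        train, observed = series[:-k], series[-k]
        model_errors.append(abs(predict_next(train) - observed))
        naive_errors.append(abs(train[-1] - observed))
    naive_mae = sum(naive_errors) / n_peels
    if naive_mae == 0:
        raise ValueError("naive baseline is perfect; MASE is undefined")
    return (sum(model_errors) / n_peels) / naive_mae


def linear_extrapolation(train):
    """Hypothetical stand-in for a fitted model: extend the last two points linearly."""
    return 2 * train[-1] - train[-2]


# Invented example series (e.g. an annual abundance index); not SEAwise data.
observed_series = [12.0, 11.5, 11.8, 10.9, 10.2, 10.4, 9.7, 9.1, 9.3, 8.6]
print(hindcast_mase(observed_series, linear_extrapolation))
```

Scaling by the naive forecast makes the score comparable across stocks or indices measured in different units, which is why a relative metric is used here rather than a raw error.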

All models and their associated data are reviewed by external experts at ICES, in addition to review through internal SEAwise workshops.

Clear communication about the assumptions, outcomes, and limitations of the models is essential to SEAwise’s quality assurance. High-quality data is essential for making informed decisions, enhancing user experience, and maintaining the integrity of the application. Together, these ensure that results from the SEAwise Tool are reliable, accurate, and usable. A set of guidelines for the treatment and communication of uncertainty forms the structure of the SEAwise quality assurance process, as outlined in the figure opposite.

To maintain data quality, regular audits and validations are undertaken, along with automated checks. Robust security measures are also used to protect sensitive information, including access controls that restrict it to authorised users.

For more information on SEAwise’s work please visit the project website: seawiseproject.org.

For more information on the processes discussed here explore these key documents:
